Spring Research Day 2012

Friday, April 27th, 2012

President's Room, Coffman Memorial Union

Featuring invited talks by Josh Tenenbaum (MIT) and Mauricio Delgado (Rutgers), plus presentations of cutting-edge research by graduate students in the cognitive sciences.

Program

| 9:00 ~ 9:10 |

|

Dan Kersten, Opening Remarks |

| 9:10 ~ 9:30 |

|

Sabine Doebel Bottom-up influences of language on executive function in early childhood. |

| 9:30 ~ 9:50 |

|

Shane Hoversten |

| 9:50 ~ 10:00 |

|

Break |

| 10:00 ~ 11:00 |

|

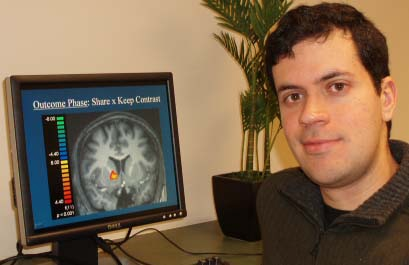

Mauricio Delgado,

Invited Speaker The successful implementation of cognitive strategies to regulate emotional responses has been a topic of great interest in cognitive neuroscience. Over the last decade, research theories and paradigms from a variety of disciplines have helped outline a putative circuitry involved in emotion regulation posited to aid individuals in coping with negative affective states. Such circuitry involves top-down modulatory influence of regions that may subserve negatively arousing responses (e.g., amygdala). More recently, investigations on the neural basis underlying the control of positive affect have emerged. A potential benefit of regulating positive affect is the ability to change value-specific representations in brain regions such as the striatum to promote more adaptive behaviors (e.g., decreased drug seeking behaviors). In this talk, we will discuss the regulation of negative affect and how this research extends to the control of positive affect and the shaping of goal-directed behaviors driven by positive reinforcers. Finally, we will discuss alternative ways in which individuals may regulate affective responses, particularly via an increased perception of control which may play an important role in buffering an individual's response to environmental stress. |

| 11:00 ~ 11:20 |

|

Raquel Gabbitas An fMRI study on cognitive control following early deprivation |

| 11:20 ~ 12:00 |

Poster Session |

|

| 12:00 ~ 1:00 |

|

Lunch |

| 1:00 ~ 2:00 |

|

Josh Tenenbaum, Invited Speaker Artificial intelligence has made great strides over its 60 year history, building computer systems with abilities to perceive, reason, learn and communicate that come increasingly close to human capacities. Yet there is still a huge gap. Even the best current AI systems make mistakes in reasoning that no normal human child would ever make, because they seem to lack a basic common-sense understanding of the world: an understanding of how physical objects move and interact with each other, how and why people act as they do, and how people interact with objects, their environment and other people to achieve their goals. I will talk about recent efforts to capture these core aspects of human common sense in computational models that can be compared with the judgments of both adults and young children in precise quantitative experiments, and used for building more human-like AI systems. These models of intuitive physics and intuitive psychology take the form of "probabilistic programs": probabilistic generative models defined not over graphs, as in many current AI and machine learning systems, but over programs whose execution traces describe the causal processes giving rise to the behavior of physical objects and intentional agents. Perceiving, reasoning, predicting, and learning in these common-sense physical and psychological domains can then all be characterized as approximate forms of Bayesian inference over probabilistic programs. |

| 2:00 ~ 2:20 |

|

Anna Johnson Cognitive-affective strategies and early adversity as modulators of stress reactivity in children and adolescents |

| 2:20 ~ 2:40 |

|

Nate Powell What was he thinking? The role of rodent PFC on spatial tasks |

| 2:40 ~ 2:50 |

|

Break |

| 2:50 ~ 3:10 |

|

Amanda Hodel Structural Brain Development in Post-Institutionalized Adolescents |

| 3:10 ~ 3:30 |

|

Jason Cowell The Early Antecedents of Inequality Aversion |

| 3:30 ~ 3:50 |

|

Rachel White What would Batman do?: Effects of psychological distancing on executive function in young children |

| 3:50 |  |

Closing remarks |